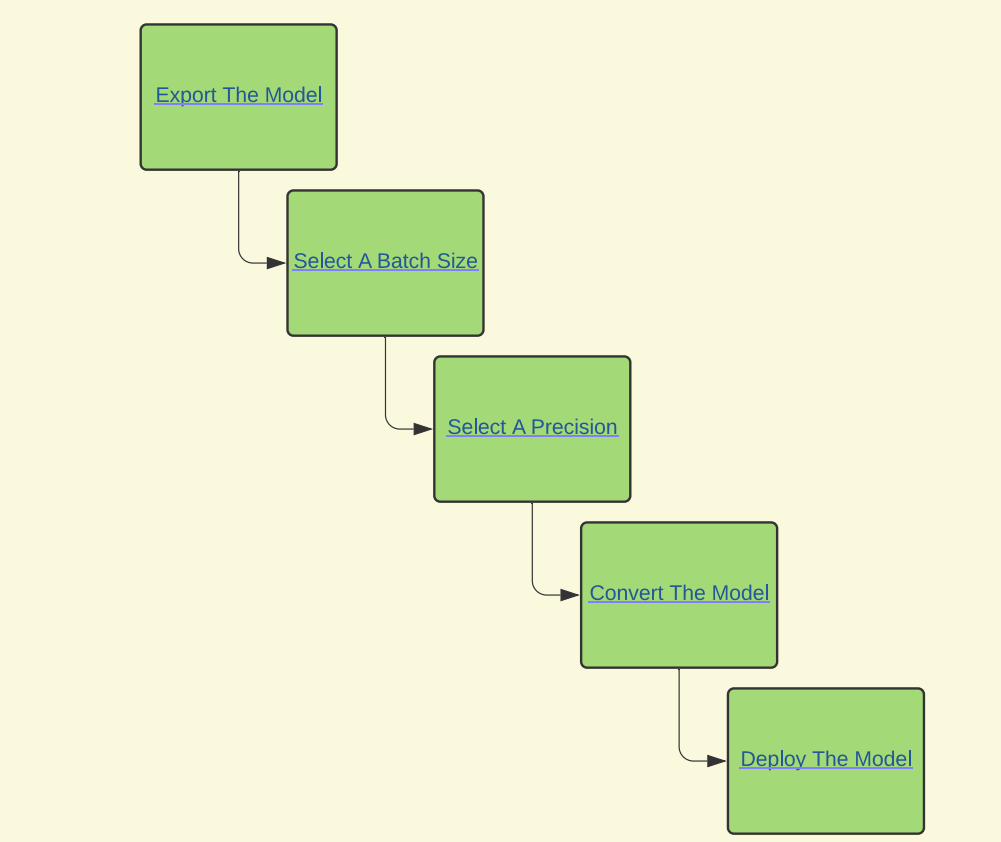

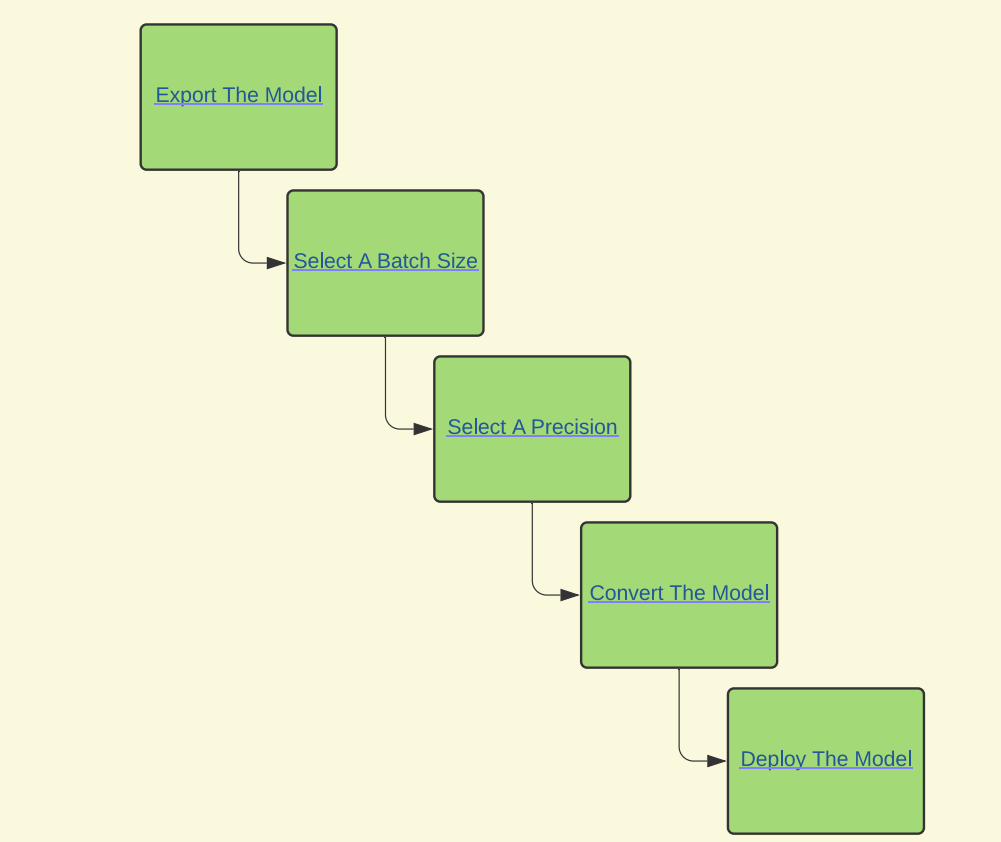

The workflow is divided into five steps:

There are two ways to convert a PyTorch model into a TensorRT (trt) model:

When the model contains an op that TensorRT does not support, you have to write a plugin by hand.

The second method is more flexible (and, of course, harder).
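
To find out whether a plugin is needed at all, it can help to list the op types in the model and compare them against the ops the target TensorRT version supports. The sketch below only illustrates that comparison. The names `ASSUMED_SUPPORTED_OPS`, `find_unsupported_ops`, and `MyCustomNms` are hypothetical, and the supported set is a placeholder, not TensorRT's real support matrix.

```python
from typing import Iterable, List, Set

# Placeholder for illustration only: NOT TensorRT's real list of supported ops.
# Consult the support matrix of the TensorRT version you actually target.
ASSUMED_SUPPORTED_OPS: Set[str] = {"Conv", "Relu", "MaxPool", "Gemm", "Add"}


def find_unsupported_ops(
    op_types: Iterable[str],
    supported: Set[str] = ASSUMED_SUPPORTED_OPS,
) -> List[str]:
    """Return the sorted, de-duplicated op types that are not in `supported`."""
    return sorted(set(op_types) - supported)


# With the `onnx` package installed, the op types of an exported model can be
# collected like this (not executed here):
#   import onnx
#   op_types = [node.op_type for node in onnx.load("model.onnx").graph.node]
op_types = ["Conv", "Relu", "MyCustomNms", "Conv", "Gemm"]  # made-up example
print(find_unsupported_ops(op_types))  # prints ['MyCustomNms']
```

Every op this reports is a candidate for a hand-written plugin.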

Deploying with the TensorRT runtime API

The TensorRT runtime API allows for the lowest overhead and finest-grained control, but operators that TensorRT does not natively support must be implemented as plugins (a library of prewritten plugins is available here). The most common path for deploying with the runtime API is via ONNX export from a framework, which is covered in this guide in the following section.
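
The ONNX path described above might look roughly like the following for a PyTorch model. This is a minimal sketch under assumptions that are not in the original notes: the model takes a single input tensor, the file names are placeholders, and the ONNX-to-engine conversion is done with the `trtexec` command-line tool that ships with TensorRT. The helper names `export_to_onnx` and `trtexec_command` are hypothetical.

```python
from typing import List


def export_to_onnx(model, example_input, onnx_path: str) -> None:
    """Export a PyTorch module to ONNX using an example input tensor."""
    import torch  # lazy import so the rest of this sketch runs without PyTorch

    model.eval()  # switch off dropout / batch-norm updates before export
    torch.onnx.export(
        model,
        (example_input,),        # model inputs, passed as a tuple
        onnx_path,
        input_names=["input"],   # assumed tensor names, chosen for illustration
        output_names=["output"],
    )


def trtexec_command(onnx_path: str, engine_path: str, fp16: bool = False) -> List[str]:
    """Build a trtexec command line that converts an ONNX file into an engine."""
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels where the hardware supports them
    return cmd


# Only build and show the command; running it requires a TensorRT installation.
print(" ".join(trtexec_command("model.onnx", "model.engine", fp16=True)))
# prints: trtexec --onnx=model.onnx --saveEngine=model.engine --fp16
```

If the exported graph contains an op TensorRT cannot parse, this conversion step is typically where the failure shows up.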

The model can be deployed from either C++ or Python. As before, any op that TensorRT does not support has to be implemented by hand.
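
On the Python side, loading a serialized engine could be sketched as below. This assumes the `tensorrt` Python package is installed and that an engine file was already built for the same TensorRT version and GPU. The function name `load_engine` and the file path are hypothetical.

```python
def load_engine(engine_path: str):
    """Deserialize a TensorRT engine file and create an execution context."""
    # Read the file first so that a bad path fails before TensorRT is needed.
    with open(engine_path, "rb") as f:
        serialized_engine = f.read()

    import tensorrt as trt  # lazy import: requires the TensorRT Python package

    logger = trt.Logger(trt.Logger.WARNING)
    runtime = trt.Runtime(logger)
    engine = runtime.deserialize_cuda_engine(serialized_engine)
    if engine is None:
        raise RuntimeError(f"failed to deserialize engine: {engine_path}")

    context = engine.create_execution_context()
    # Return the runtime as well so it stays alive as long as the engine does.
    return runtime, engine, context


# Example call (needs a real engine file and a GPU, so it is left commented out):
# runtime, engine, context = load_engine("model.engine")
```

The calls that bind buffers and launch inference on the context differ between TensorRT releases, so they are left out here. If the engine relies on custom plugins, those plugins must be registered before deserialization.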