Guanzhi Wang1,2, Yuqi Xie3, Yunfan Jiang4*, Ajay Mandlekar1*,

1NVIDIA, 2Caltech, 3UT Austin, 4Stanford, 5ASU

*Equal contribution †Equal advising

Corresponding authors: guanzhi@caltech.edu, dr.jimfan.ai@gmail.com

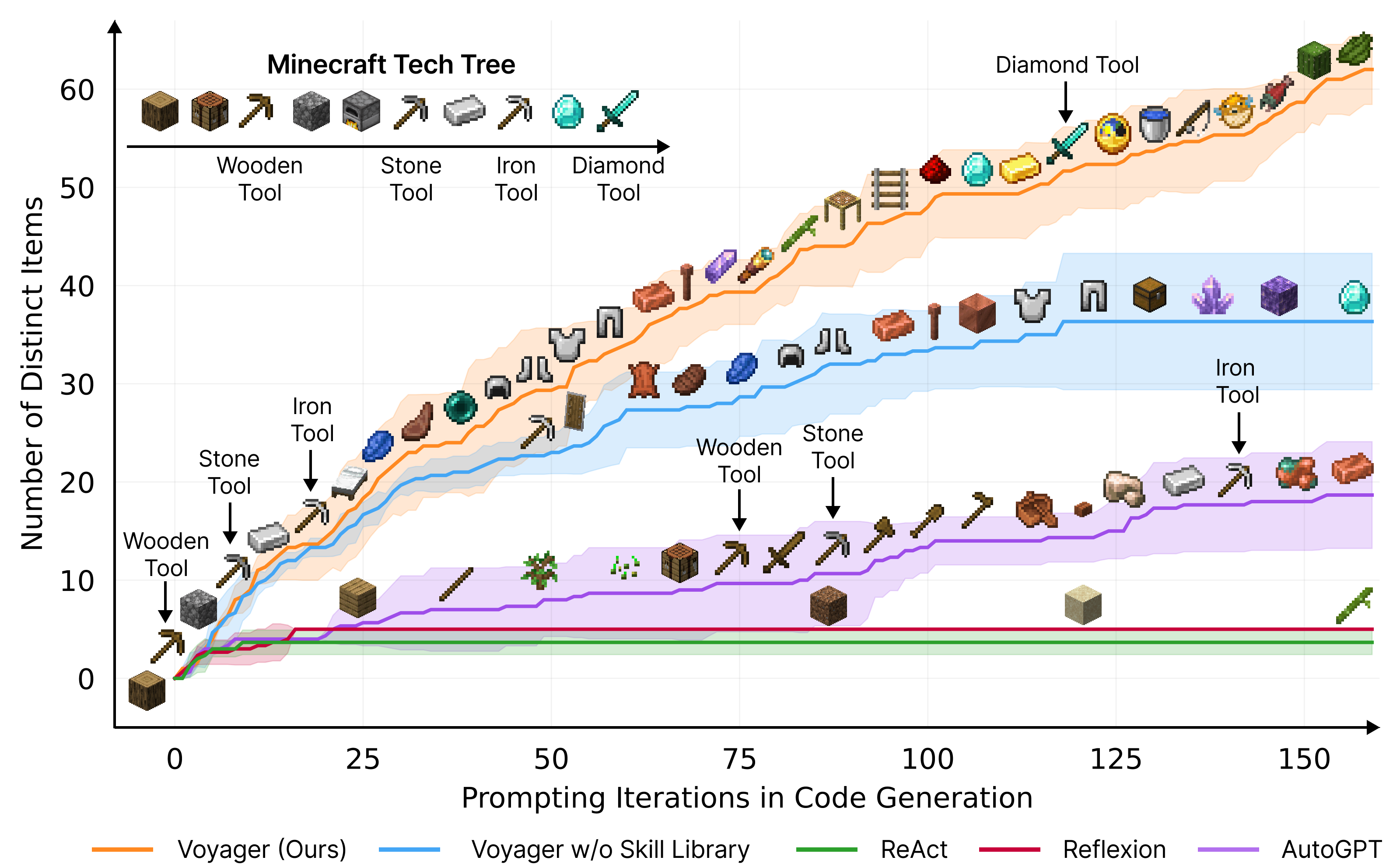

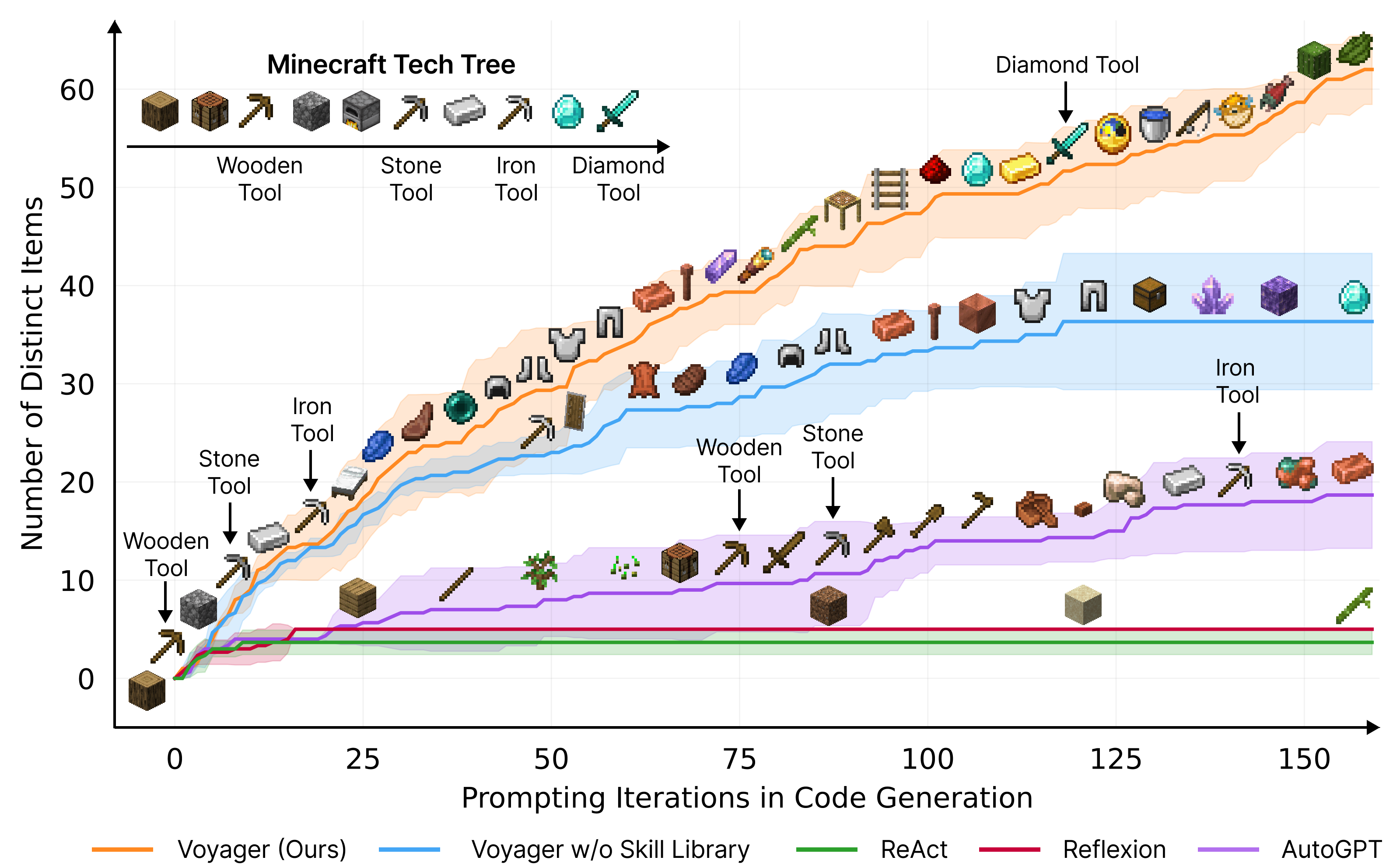

We introduce Voyager, the first LLM-powered embodied lifelong learning agent in Minecraft that continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention. Voyager consists of three key components: 1) an automatic curriculum that maximizes exploration, 2) an ever-growing skill library of executable code for storing and retrieving complex behaviors, and 3) a new iterative prompting mechanism that incorporates environment feedback, execution errors, and self-verification for program improvement. Voyager interacts with GPT-4 via blackbox queries, which bypasses the need for model parameter fine-tuning. The skills developed by Voyager are temporally extended, interpretable, and compositional, which compounds the agent's abilities rapidly and alleviates catastrophic forgetting. Empirically, Voyager shows strong in-context lifelong learning capability and exhibits exceptional proficiency in playing Minecraft. It obtains 3.3x more unique items, travels 2.3x longer distances, and unlocks key tech tree milestones up to 15.3x faster than prior SOTA. Voyager is able to utilize the learned skill library in a new Minecraft world to solve novel tasks from scratch, while other techniques struggle to generalize.
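As a rough illustration of how the skill library and the iterative prompting mechanism described above could fit together, the following is a minimal sketch and not the authors' implementation. All names here (`SkillLibrary`, `learn_task`, and the `stub_*` functions) are hypothetical. The LLM query, the game environment, and the self-verification step are replaced by toy stand-ins, retrieval uses simple word overlap as an assumption, and the automatic curriculum is reduced to a task string passed in by the caller.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class SkillLibrary:
    """Hypothetical store of verified programs, keyed by task description."""
    skills: Dict[str, str] = field(default_factory=dict)

    def add(self, task: str, program: str) -> None:
        self.skills[task] = program

    def retrieve(self, task: str, k: int = 3) -> List[str]:
        # Toy relevance score (an assumption): words shared with the query task.
        query = set(task.lower().split())
        scored = [(len(query & set(t.lower().split())), p)
                  for t, p in self.skills.items()]
        scored = [sp for sp in scored if sp[0] > 0]
        scored.sort(key=lambda sp: sp[0], reverse=True)
        return [p for _, p in scored[:k]]


def learn_task(
    task: str,
    library: SkillLibrary,
    generate: Callable[[str, List[str], str], str],
    execute: Callable[[str], Tuple[str, Optional[str]]],
    verify: Callable[[str, str], bool],
    max_rounds: int = 4,
) -> bool:
    """Refine a program with feedback until it verifies, then store it."""
    feedback = ""
    for _ in range(max_rounds):
        # Black-box query: prompt with the task, retrieved skills, and feedback.
        program = generate(task, library.retrieve(task), feedback)
        env_feedback, error = execute(program)
        if error is None and verify(task, env_feedback):
            library.add(task, program)  # only verified programs become skills
            return True
        # Feed environment feedback and execution errors into the next round.
        feedback = f"env: {env_feedback}; error: {error}"
    return False


# Toy stand-ins for the LLM, the environment, and self-verification.
def stub_generate(task: str, skills: List[str], feedback: str) -> str:
    # The first draft is buggy; the revision made after feedback works.
    return "mine_log()" if feedback else "mine_lgo()"


def stub_execute(program: str) -> Tuple[str, Optional[str]]:
    if program == "mine_log()":
        return "inventory: 1 oak_log", None
    return "inventory: empty", "ReferenceError: mine_lgo is not defined"


def stub_verify(task: str, env_feedback: str) -> bool:
    return "oak_log" in env_feedback


library = SkillLibrary()
succeeded = learn_task("mine one wood log", library,
                       stub_generate, stub_execute, stub_verify)
```

In this sketch a program enters the library only after it runs without error and passes verification, so later tasks retrieve and reuse working code instead of relearning it. No model parameters are updated at any point.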

Voyager discovers new Minecraft items and skills continually by self-driven exploration, significantly outperforming the baselines.

Building generally capable embodied agents that continuously explore, plan, and develop new skills in open-ended worlds is a grand challenge for the AI community. Classical approaches employ reinforcement learning (RL) and imitation learning that operate on primitive actions, which could be challenging for systematic exploration, interpretability, and generalization. Recent advances in large language model (LLM) based agents harness the world knowledge encapsulated in pre-trained LLMs to generate consistent action plans or executable policies. They are applied to embodied tasks like games and robotics, as well as NLP tasks without embodiment. However, these agents are not lifelong learners that can progressively acquire, update, accumulate, and transfer knowledge over extended time spans.